Vec2Text

Reconstructing text sequences from their embeddings alone.

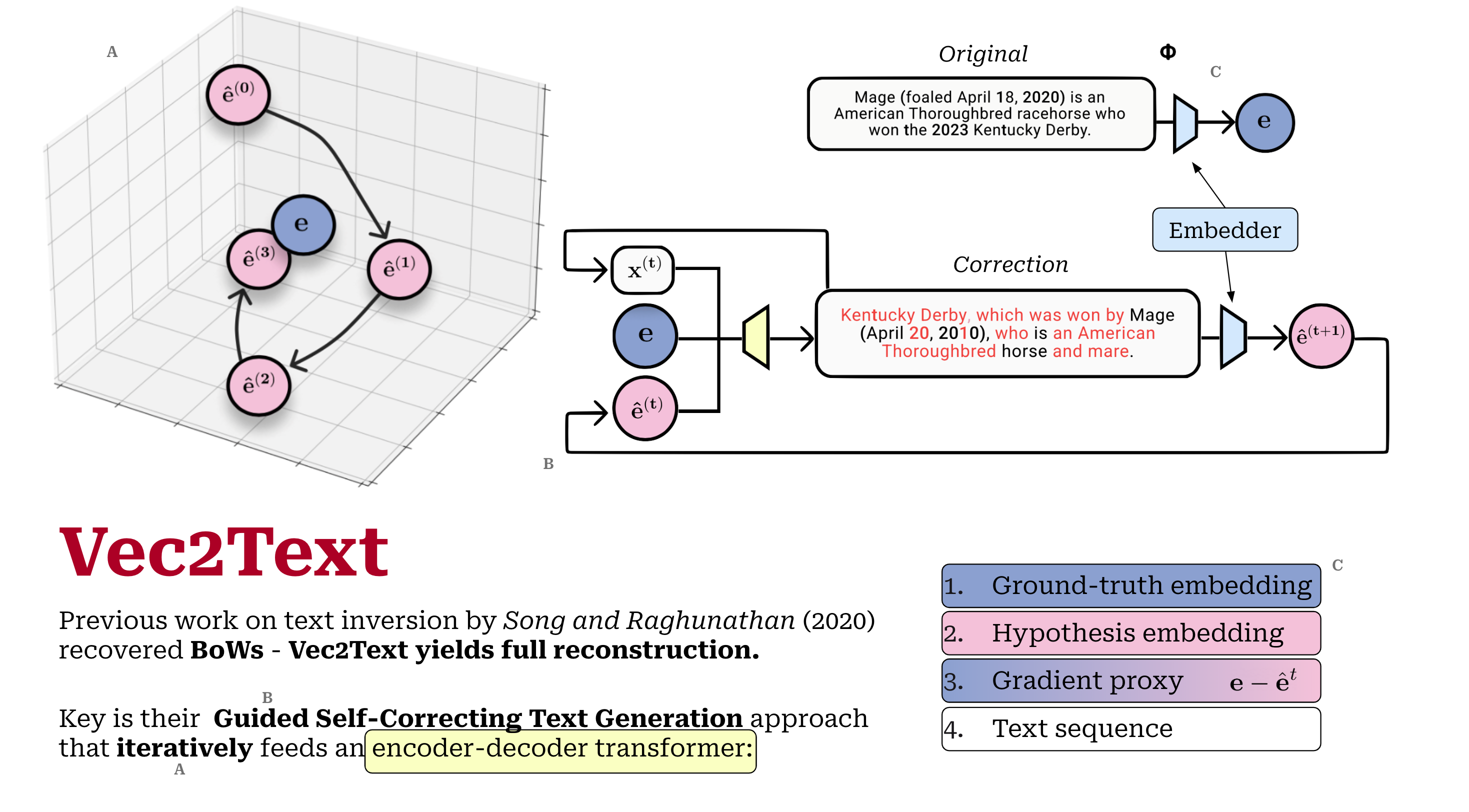

This project reproduces findings from the study "Text Embeddings Reveal (Almost) As Much As Text" (Morris et al., 2023), which highlights a privacy risk in text embeddings: the original text can often be reconstructed from its embedding. Vec2Text uses a transformer-based encoder-decoder model with iterative correction to invert embeddings, and its reconstruction accuracy is measured with BLEU and Token F1 scores across several datasets. Our analysis validates the original study's claims on in-domain data, explores the effect of factors such as beam width and sequence length, and assesses the computational trade-offs. These findings emphasise the need to balance privacy, performance, and efficiency in text embedding systems.
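To make the Token F1 metric concrete, here is a minimal sketch of how such a score can be computed between a reconstructed sentence and the original. It assumes lowercased whitespace tokenisation and multiset token overlap; the function name `token_f1` is illustrative, and the tokenisation used in the original study and in this project's evaluation may differ.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-level F1 between a reconstruction and the original text.

    Assumption: tokens are lowercased, whitespace-separated words, and
    overlap is counted as a multiset intersection.
    """
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()

    # If either side is empty, score 1.0 only when both are empty.
    if not pred_tokens or not ref_tokens:
        return float(pred_tokens == ref_tokens)

    # Number of tokens shared by both texts, respecting repeat counts.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0

    precision = overlap / len(pred_tokens)  # share of predicted tokens that are correct
    recall = overlap / len(ref_tokens)      # share of reference tokens that were recovered
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    original = "the cat sat on the mat"
    reconstruction = "the cat sat on a mat"
    print(f"Token F1: {token_f1(reconstruction, original):.3f}")
```

Unlike BLEU, this score ignores word order, so it rewards a reconstruction that recovers the right words even when they appear in the wrong positions.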

References

- John X. Morris, Volodymyr Kuleshov, Vitaly Shmatikov, and Alexander M. Rush. Text Embeddings Reveal (Almost) As Much As Text. EMNLP, 2023.

    @misc{morris2023textembeddingsrevealalmost,
      title         = {Text Embeddings Reveal (Almost) As Much As Text},
      author        = {Morris, John X. and Kuleshov, Volodymyr and Shmatikov, Vitaly and Rush, Alexander M.},
      year          = {2023},
      eprint        = {2310.06816},
      archiveprefix = {arXiv},
      primaryclass  = {cs.CL},
      url           = {https://arxiv.org/abs/2310.06816}
    }